✅Clustering in Machine Learning - Explained in simple terms with implementation details (code, techniques and best tips).

A quick thread 👇🏻🧵

#MachineLearning #DataScientist #Coding #100DaysofCode #hubofml #deeplearning #DataScience

PC : ResearchGate

1/ Imagine you have a bunch of colorful marbles, but they are all mixed up and you want to organize them. Clustering in machine learning is like finding groups of marbles that are similar to each other.

2/ You know how some marbles are big and some are small? And some are red, while others are blue or green? Clustering helps us put marbles that are similar in size or color together in the same group. So, all the big red marbles might be in one group, and the rest in another group.

3/ Machine learning uses mathematics to look at the marbles and decide which ones should be in the same group, just like when you look at the marbles and group them by how alike they look.

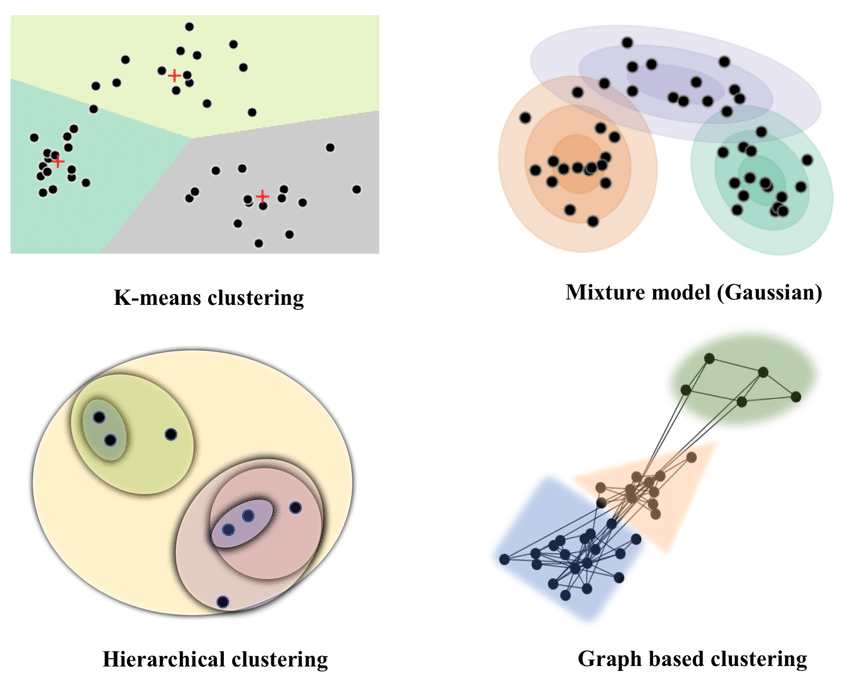

4/ Clustering in ML refers to the process of grouping similar data points together based on the features or characteristics they share. The goal of clustering is to identify inherent patterns and structures within a dataset without any prior knowledge of the class labels.

5/ Clustering aims to discover natural groupings within the data, where data points within the same group are more similar to each other than to those in other groups. Clustering is an unsupervised learning technique.

8/ Partitioning Clustering:

Partitioning Clustering divides data points into distinct, non-overlapping subsets (clusters). These algorithms optimize a chosen criterion, most often minimizing the distance between each data point and the center of its cluster.
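K-Means is the best-known partitioning algorithm, so here is a minimal sketch of it (Lloyd's algorithm) in NumPy. The function name `kmeans`, its parameters, and the toy two-blob data are assumptions made up for illustration, not code taken from the thread's repository.

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Plain Lloyd's algorithm: alternate assignment and centroid update."""
    rng = np.random.default_rng(seed)
    # Start from k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assignment step: each point goes to its nearest centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Toy data (made up): two well-separated blobs.
rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2)),
])
labels, centroids = kmeans(X, k=2)
print(centroids)
```

In practice you would more likely reach for a library implementation such as scikit-learn's `KMeans`, which adds smarter initialization and multiple restarts.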

10/ K-Medoids Algorithm: K-Medoids is similar to K-Means but uses actual data points as cluster representatives (medoids) instead of centroids. Because a medoid must be a real data point, an extreme value cannot drag the cluster center toward it the way it drags a mean, which makes K-Medoids more robust to outliers.
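To make the outlier point concrete, here is a small sketch of a simplified alternating K-Medoids loop in NumPy (not the full PAM algorithm). The function `kmedoids`, the `init_medoids` argument, and the toy 1-D data are all hypothetical; the starting medoids are fixed by hand here, whereas they would normally be chosen at random or by a heuristic.

```python
import numpy as np

def kmedoids(X, init_medoids, n_iter=100):
    """Alternating k-medoids; init_medoids holds row indices of the starting medoids."""
    # Pairwise Euclidean distances between all points.
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    medoids = np.array(init_medoids)
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest medoid.
        labels = D[:, medoids].argmin(axis=1)
        new_medoids = medoids.copy()
        for j in range(len(medoids)):
            members = np.flatnonzero(labels == j)
            if len(members) == 0:
                continue  # keep the old medoid if a cluster ends up empty
            # Update step: pick the member with the smallest total distance to the rest.
            costs = D[np.ix_(members, members)].sum(axis=1)
            new_medoids[j] = members[costs.argmin()]
        if np.array_equal(new_medoids, medoids):
            break
        medoids = new_medoids
    labels = D[:, medoids].argmin(axis=1)
    return labels, medoids

# Toy 1-D data (made up): two groups plus one extreme outlier at 30.
X = np.array([[1.0], [2.0], [3.0], [8.0], [9.0], [10.0], [11.0], [30.0]])
labels, medoids = kmedoids(X, init_medoids=[0, 3])

cluster = X[labels == labels[-1]]              # the cluster that contains the outlier
medoid_value = X[medoids[labels[-1]]]          # an actual data point (10.0)
mean_value = cluster.mean(axis=0)              # pulled toward the outlier (13.6)
print("medoid:", medoid_value, "mean:", mean_value)
```

The medoid stays inside the 8 to 11 group, while the mean of the same cluster lands outside it because of the single point at 30.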

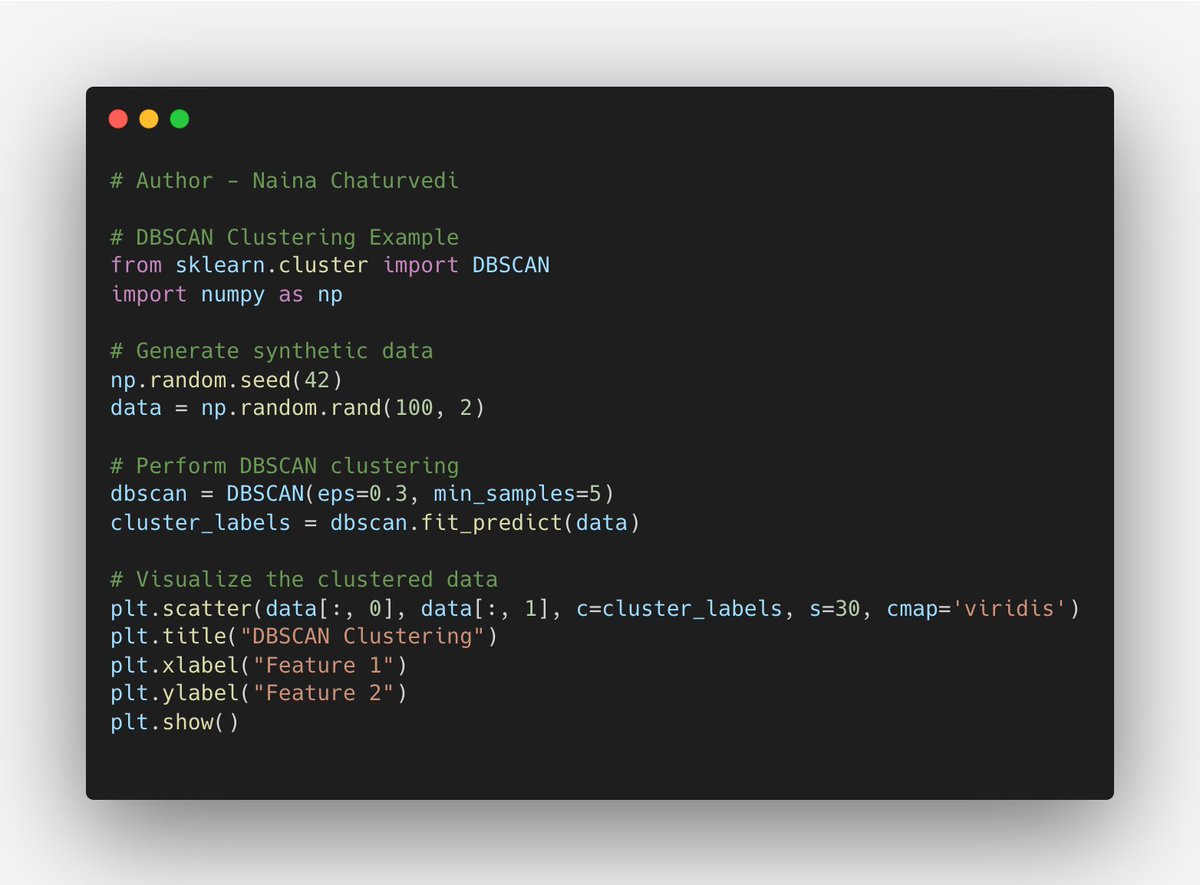

11/ Density-Based Clustering:

Density-Based Clustering identifies clusters by considering regions of higher data point density. It's effective in finding irregularly shaped clusters and is less influenced by noise.
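DBSCAN is a widely used density-based algorithm, so a short sketch with scikit-learn may help here. The two-moons toy data and the `eps` / `min_samples` values are assumptions picked for this example; real data usually needs these tuned.

```python
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons

# Toy data (made up): two interleaving half-circles, a shape centroid-based methods handle poorly.
X, _ = make_moons(n_samples=300, noise=0.05, random_state=0)

# eps: neighborhood radius; min_samples: points needed to form a dense region.
db = DBSCAN(eps=0.2, min_samples=5).fit(X)
labels = db.labels_  # cluster index per point; -1 marks points treated as noise

n_clusters = len(set(labels)) - (1 if -1 in labels else 0)
n_noise = int((labels == -1).sum())
print("clusters:", n_clusters, "noise points:", n_noise)
```

Note that you never tell DBSCAN how many clusters to find; the count falls out of the density settings.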

13/ Model-Based Clustering:

Model-Based Clustering involves fitting statistical models to data and using them to identify clusters. It assumes that data points are generated from a mixture of underlying probability distributions.
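A Gaussian Mixture Model is a common instance of this idea. The sketch below uses scikit-learn's `GaussianMixture`; the synthetic two-component data and the choice of two components are assumptions for illustration only.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Toy data (made up): samples drawn from two Gaussians with different spreads.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=1.0, size=(200, 2)),
    rng.normal(loc=[6.0, 6.0], scale=1.5, size=(200, 2)),
])

# Fit a mixture of two Gaussians with full covariance matrices.
gmm = GaussianMixture(n_components=2, covariance_type="full", random_state=0).fit(X)

hard_labels = gmm.predict(X)        # most likely component for each point
soft_probs = gmm.predict_proba(X)   # probability of each component for each point
print(gmm.means_)
```

Unlike K-Means, the model gives soft assignments: each point gets a probability of belonging to every cluster.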

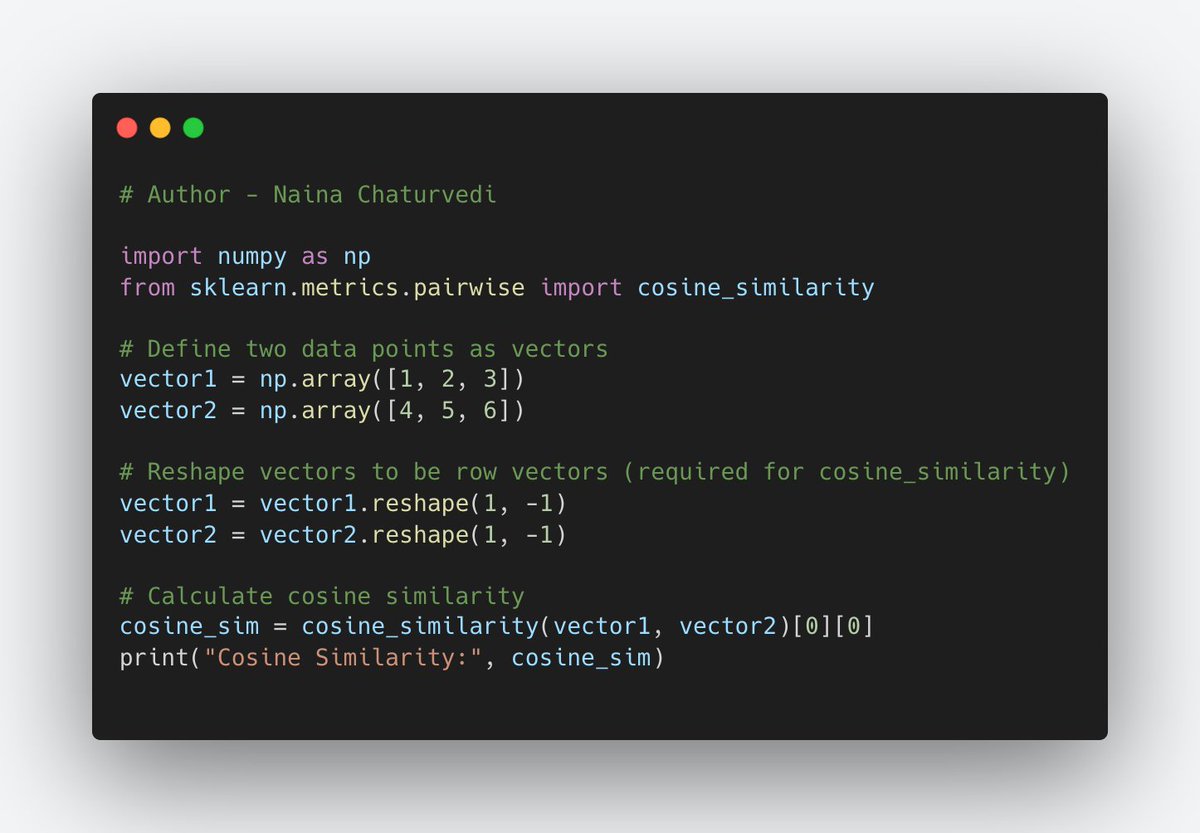

15/ Distance Metrics:

Distance metrics are mathematical formulas that help us measure how far apart or similar two data points are in a given space. They are crucial in clustering, classification, and similarity analysis.
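As a quick illustration, here are three commonly used metrics (Euclidean, Manhattan, and cosine distance) computed with NumPy. The two vectors `a` and `b` are made-up example points, and this selection of metrics is an assumption, not a list taken from the thread.

```python
import numpy as np

# Two made-up data points with three features each.
a = np.array([1.0, 2.0, 3.0])
b = np.array([4.0, 6.0, 3.0])

# Euclidean: straight-line distance, sqrt(3^2 + 4^2 + 0^2).
euclidean = np.linalg.norm(a - b)          # 5.0

# Manhattan: sum of absolute differences per feature, 3 + 4 + 0.
manhattan = np.abs(a - b).sum()            # 7.0

# Cosine distance: 1 minus the cosine of the angle between the vectors,
# so it compares direction and ignores magnitude.
cosine = 1.0 - a.dot(b) / (np.linalg.norm(a) * np.linalg.norm(b))

print(euclidean, manhattan, cosine)
```

Which metric fits best depends on the data: Euclidean and Manhattan are sensitive to feature scale, while cosine distance only looks at direction.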

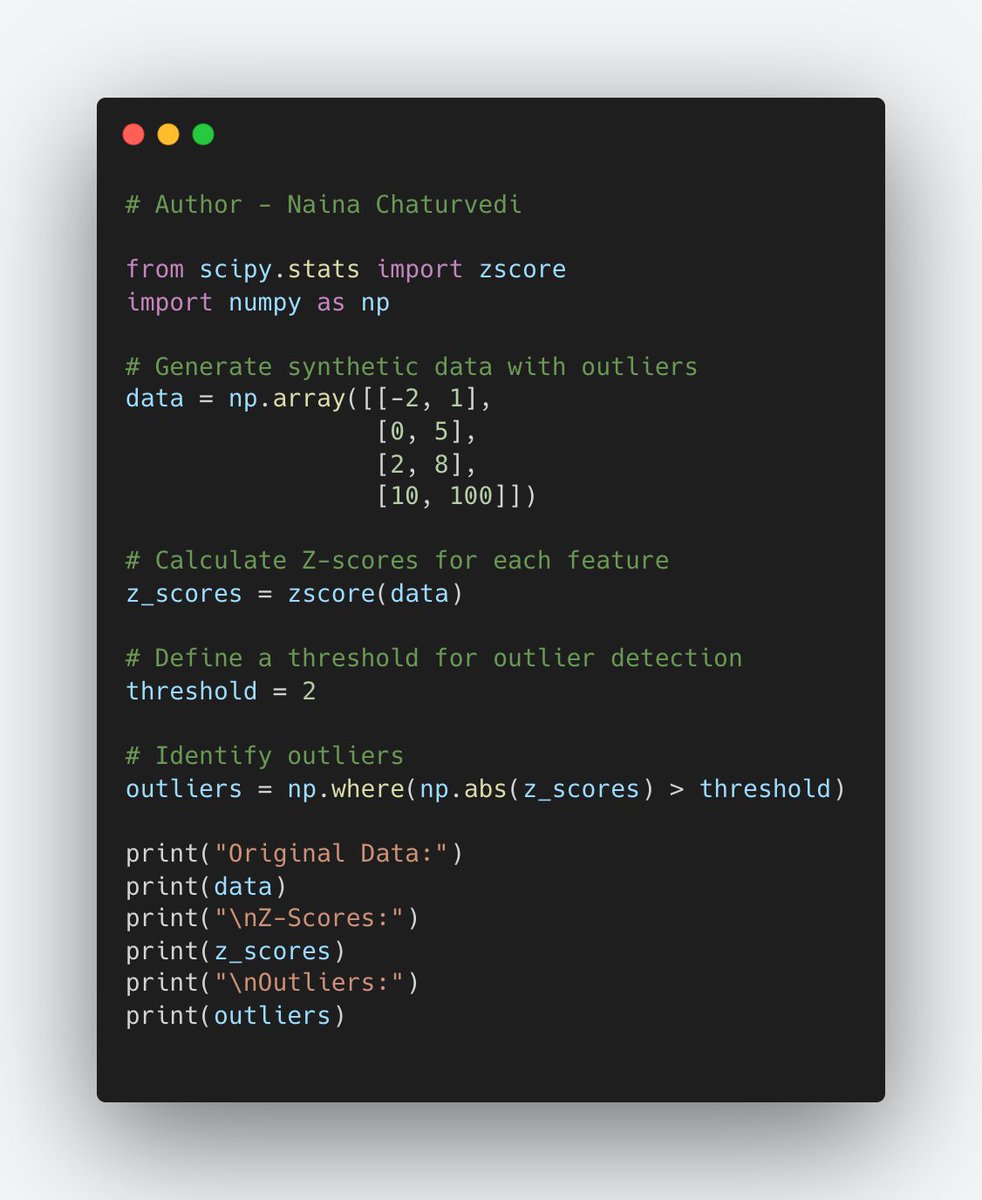

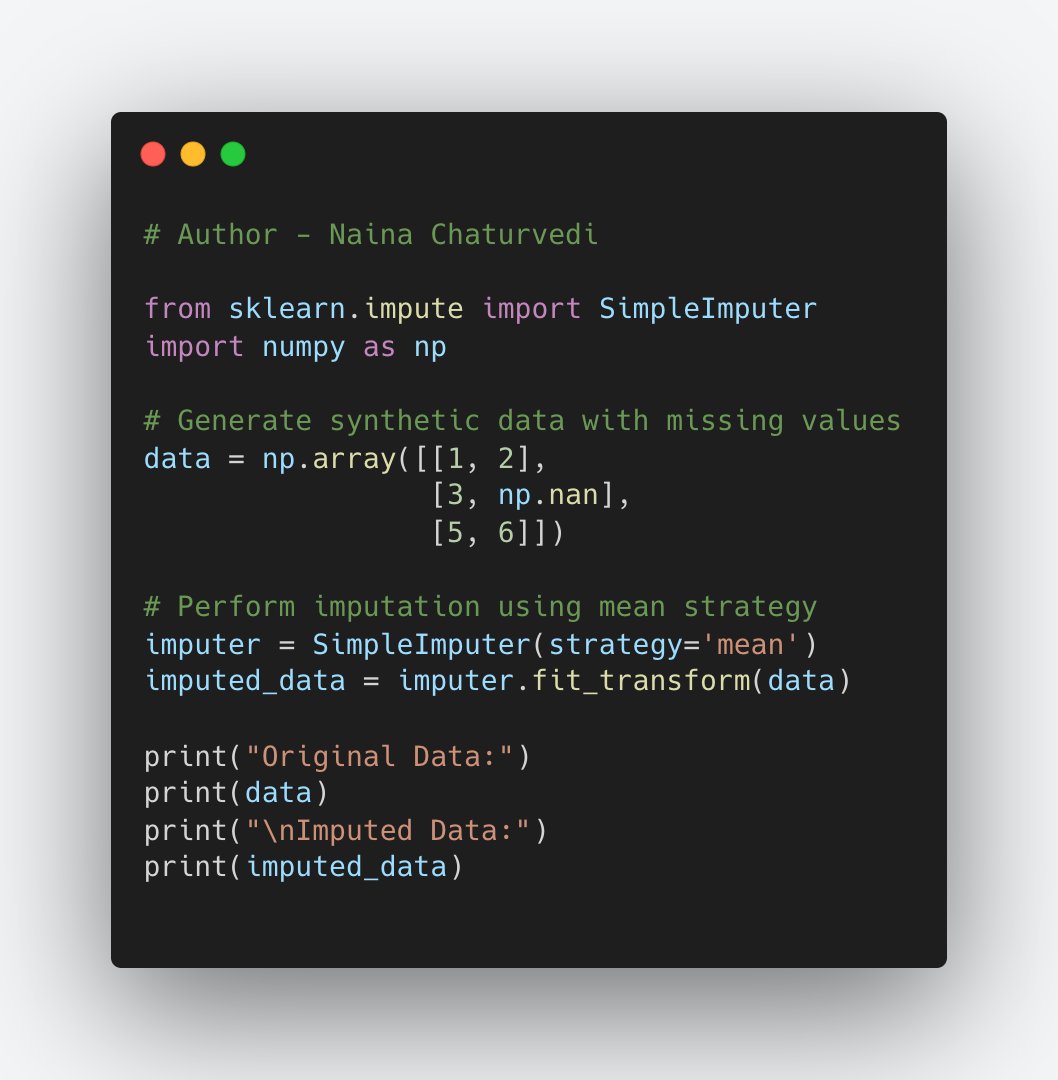

19/ Data preprocessing is a crucial step in preparing data for clustering algorithms. Clean and well-preprocessed data can lead to more accurate and meaningful clusters.
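One preprocessing step that often matters for distance-based clustering is feature scaling, since a feature measured in large units can otherwise dominate the distance. The thread does not spell out which steps it has in mind here, so treat the sketch below (z-score standardization on hypothetical age and income columns) as one assumed example.

```python
import numpy as np

# Hypothetical features: age in years and income in dollars (made-up values).
X = np.array([
    [25.0, 40_000.0],
    [32.0, 52_000.0],
    [47.0, 150_000.0],
    [51.0, 61_000.0],
])

# Z-score standardization: subtract each column's mean, divide by its standard deviation.
mean = X.mean(axis=0)
std = X.std(axis=0)
X_scaled = (X - mean) / std

print(X_scaled.round(2))
```

After scaling, both columns have mean 0 and standard deviation 1, so age and income contribute on a comparable scale. scikit-learn's `StandardScaler` performs the same transformation.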

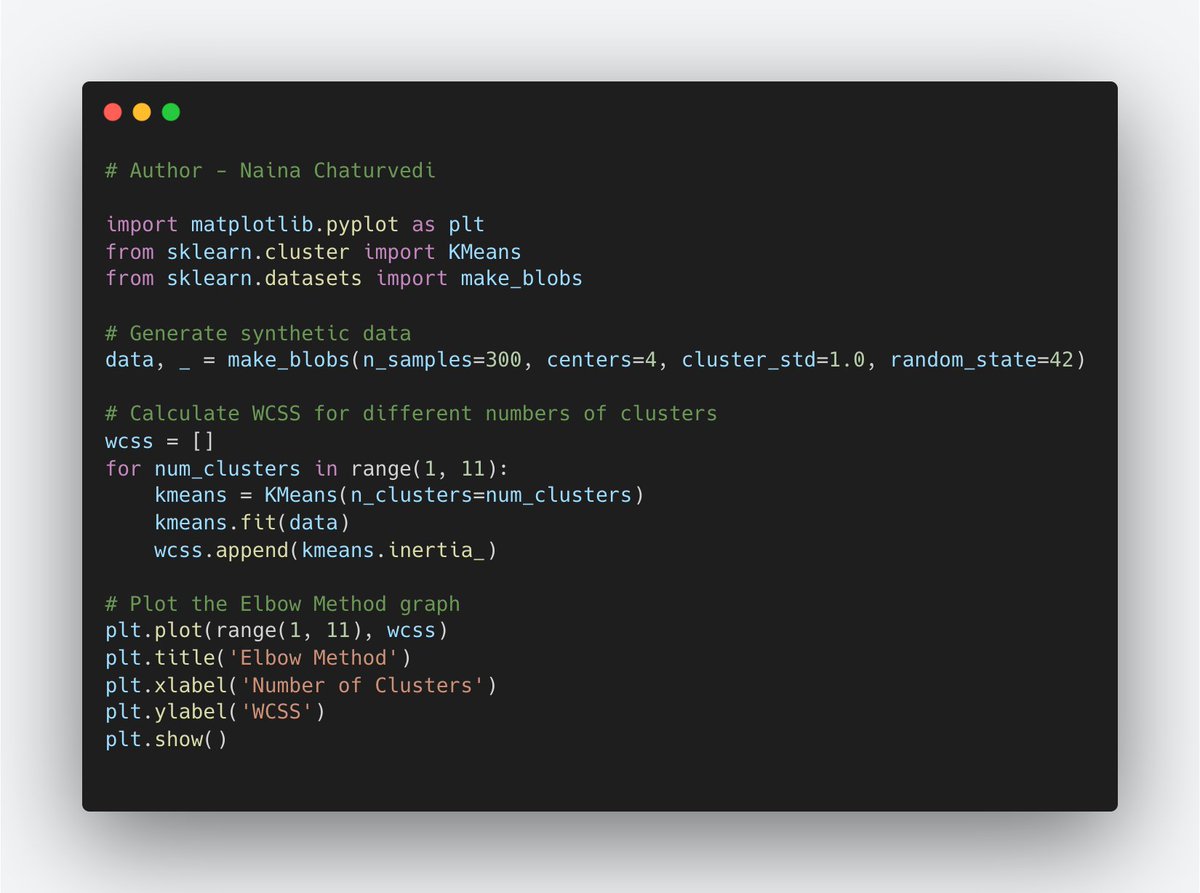

23/ Elbow Method:

Description: The Elbow Method is a graphical technique for choosing the number of clusters for the k-means algorithm. It plots the within-cluster sum of squares (WCSS) against the number of clusters; the point where the curve stops dropping sharply (the "elbow") suggests a reasonable number of clusters.
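Here is a rough sketch of how the WCSS values for an elbow plot could be collected with scikit-learn, where `inertia_` is the fitted model's WCSS. The three-blob toy data and the range of k values tried are assumptions for this example.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Toy data (made up): three blobs, so the elbow is expected around k = 3.
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=0)

ks = list(range(1, 9))
wcss = []
for k in ks:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss.append(model.inertia_)  # within-cluster sum of squares for this k

for k, value in zip(ks, wcss):
    print(k, round(value, 1))
```

Plotting `wcss` against `ks` (for example with matplotlib) gives the elbow curve; the drop should be steep up to the true number of groups and flatten afterwards.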

github.com/Coder-World04/…

GitHub - Coder-World04/Complete-Machine-Learning-: This repository contains everything you need to become proficient in Machine Learning

naina0405.substack.com

Ignito | Naina Chaturvedi | Substack

