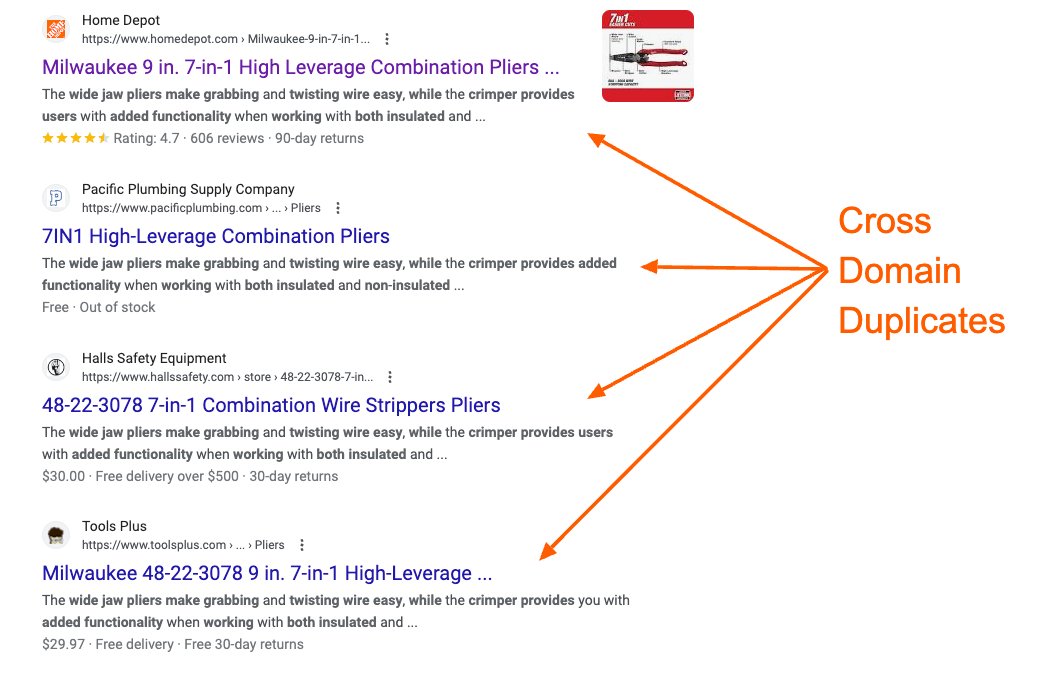

Cross-domain duplicates exist when there are similar or exact matches of your content on other sites. As with standard duplicate content, this can still cause SEO issues for your pages (source: developers.google.com).

Google won't index every version of a duplicate. It may index some, but if it finds enough copies, it may start filtering them out of the search results.

This means your site could be at risk for indexation issues if you aren't one of the ones selected.

The most common case is ecommerce sites that reuse the manufacturer's product descriptions and details. Because so many sites do this, those pages end up less unique across the index.
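
If you want a rough way to check how close your copy is to the manufacturer's, one common near-duplicate heuristic is word shingling with Jaccard similarity. This is a minimal sketch under that assumption: Google does not publish how it detects duplicates, so treat the score as a self-audit signal only. The function names and sample texts are illustrative.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Too short for a full shingle: treat the whole text as one shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets: |A & B| / |A | B|."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical example texts.
manufacturer = "Stainless steel kettle with 1.7 litre capacity and rapid boil technology."
copied = "Stainless steel kettle with 1.7 litre capacity and rapid boil technology."
rewritten = "Our favourite kettle for busy kitchens: 1.7 litres, boils fast, and the steel body wipes clean."

print(jaccard_similarity(manufacturer, copied))     # 1.0 (exact copy)
print(jaccard_similarity(manufacturer, rewritten))  # 0.0 (no shared 3-word runs)
```

A score near 1.0 means your page is essentially the manufacturer's text; there is no official threshold, so compare pages relative to each other rather than against a fixed cutoff.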

Other examples are syndication and reposts of articles to platforms like Medium.

The best way to avoid this is, of course, to make sure your content is always unique.

However, if you want to fix it at scale, you may need programmatic ways to differentiate your content (dynamic headers, internal linking modules, etc.).
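
As a rough illustration of the programmatic approach, here is a minimal sketch that builds a page header from structured product attributes and a small internal-linking module. The `Product` fields, the `/category/` URL pattern, and the helper names are all hypothetical; adapt them to your own catalogue. Whether this is enough differentiation in Google's eyes is not guaranteed.

```python
import html
from dataclasses import dataclass, field


@dataclass
class Product:
    # Hypothetical product record; field names are illustrative only.
    name: str
    brand: str
    category: str
    colour: str
    related: list[str] = field(default_factory=list)  # related category slugs


def dynamic_header(p: Product) -> str:
    """Build an H1 from product attributes instead of reusing the manufacturer's title."""
    return f"{p.brand} {p.name} in {p.colour} | {p.category.title()}"


def related_links(p: Product, base_url: str = "/category/") -> list[str]:
    """Build an internal-linking module: one anchor tag per related category slug."""
    links = []
    for slug in p.related:
        label = slug.replace("-", " ").title()
        links.append(f'<a href="{html.escape(base_url + slug)}">{html.escape(label)}</a>')
    return links


kettle = Product("Rapid Boil Kettle", "Acme", "kitchen appliances", "brushed steel",
                 ["electric-kettles", "toasters"])
print(dynamic_header(kettle))  # Acme Rapid Boil Kettle in brushed steel | Kitchen Appliances
print(related_links(kettle))
```

Because the header and links are assembled from your own catalogue data, each page picks up wording and link context that a competitor copying the same manufacturer feed would not have.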

#SEO #technicalSEO