the goal of this architecture is to preserve as much as possible of the knowledge learned by the original model.

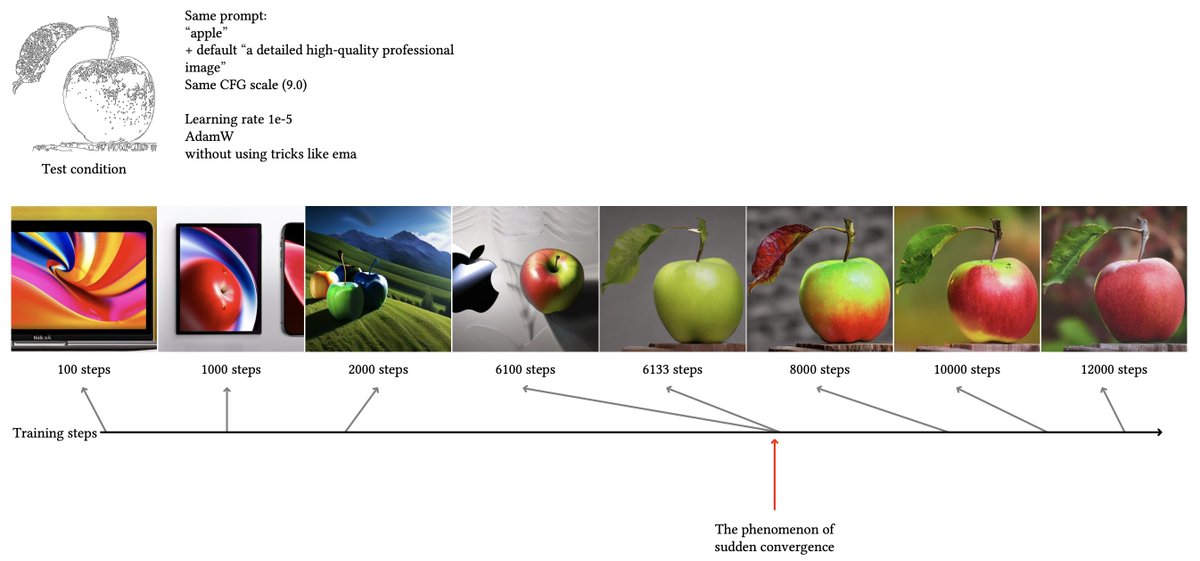

the trainable network learns how to perform the control in a progressive way thanks to the use of zero convolutions.

zero convolutions are 1x1 convolutions with weights and biases initialized to zero.
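to make the definition concrete, here is a minimal pure-Python sketch of a 1x1 convolution (1x1, per the correction later in the thread) with zero-initialized parameters. the helper names `conv1x1` and `zero_conv_params` and the toy feature map are made up for illustration, they are not taken from the ControlNet code.

```python
def conv1x1(x, weight, bias):
    """Apply a 1x1 convolution to x, a feature map shaped [C_in][H][W] (nested lists).

    weight is shaped [C_out][C_in] and bias is shaped [C_out]; each pixel is
    mixed across channels independently of its neighbours.
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    return [
        [
            [bias[o] + sum(weight[o][i] * x[i][r][c] for i in range(c_in))
             for c in range(w)]
            for r in range(h)
        ]
        for o in range(len(weight))
    ]


def zero_conv_params(c_in, c_out):
    """Parameters of a zero convolution: all weights and biases start at 0."""
    return [[0.0] * c_in for _ in range(c_out)], [0.0] * c_out


# toy 2-channel, 2x2 feature map (made-up numbers)
features = [[[1.0, 2.0], [3.0, 4.0]],
            [[-1.0, 0.5], [0.0, 2.0]]]

weight, bias = zero_conv_params(c_in=2, c_out=2)
out = conv1x1(features, weight, bias)  # every entry is zero, whatever the input
```

whatever goes in, a freshly initialized zero convolution outputs all zeros, which is what makes the extra branch harmless at the start.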

note how at the beginning of training ControlNet does not affect the original network at all (the zero convolutions output zeros), but as it trains it progressively starts influencing the generation with the condition.
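a tiny scalar sketch of why this works: with one channel and one pixel, a zero convolution reduces to `w * cond + b` with `w = b = 0`. the output starts out identical to the frozen model's, but the gradient with respect to `w` is `error * cond`, which is non-zero, so training still moves the weights. everything here (`frozen_block`, the numbers, the learning rate) is a hypothetical toy, not the actual training setup.

```python
def frozen_block(x):
    # stand-in for a locked block of the original model (hypothetical)
    return 2.0 * x


def controlled(x, cond, w, b):
    # frozen output plus the zero-convolution branch applied to the condition
    return frozen_block(x) + (w * cond + b)


x, cond, target = 1.0, 3.0, 5.0
w, b = 0.0, 0.0          # zero initialization
lr = 0.05

start = controlled(x, cond, w, b)   # identical to frozen_block(x)

history = []
for step in range(20):
    y = controlled(x, cond, w, b)
    err = y - target                # derivative of 0.5 * err**2 w.r.t. y
    grad_w = err * cond             # non-zero even while w == 0
    grad_b = err
    w -= lr * grad_w
    b -= lr * grad_b
    history.append(abs(controlled(x, cond, w, b) - target))
```

the first forward pass matches the frozen model exactly, and the error in `history` shrinks step by step as `w` and `b` drift away from zero.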

in this case, the cloned networks are the U-Net encoder/middle layers, and the outputs of each trainable copy are fed into the corresponding middle/decoder block.

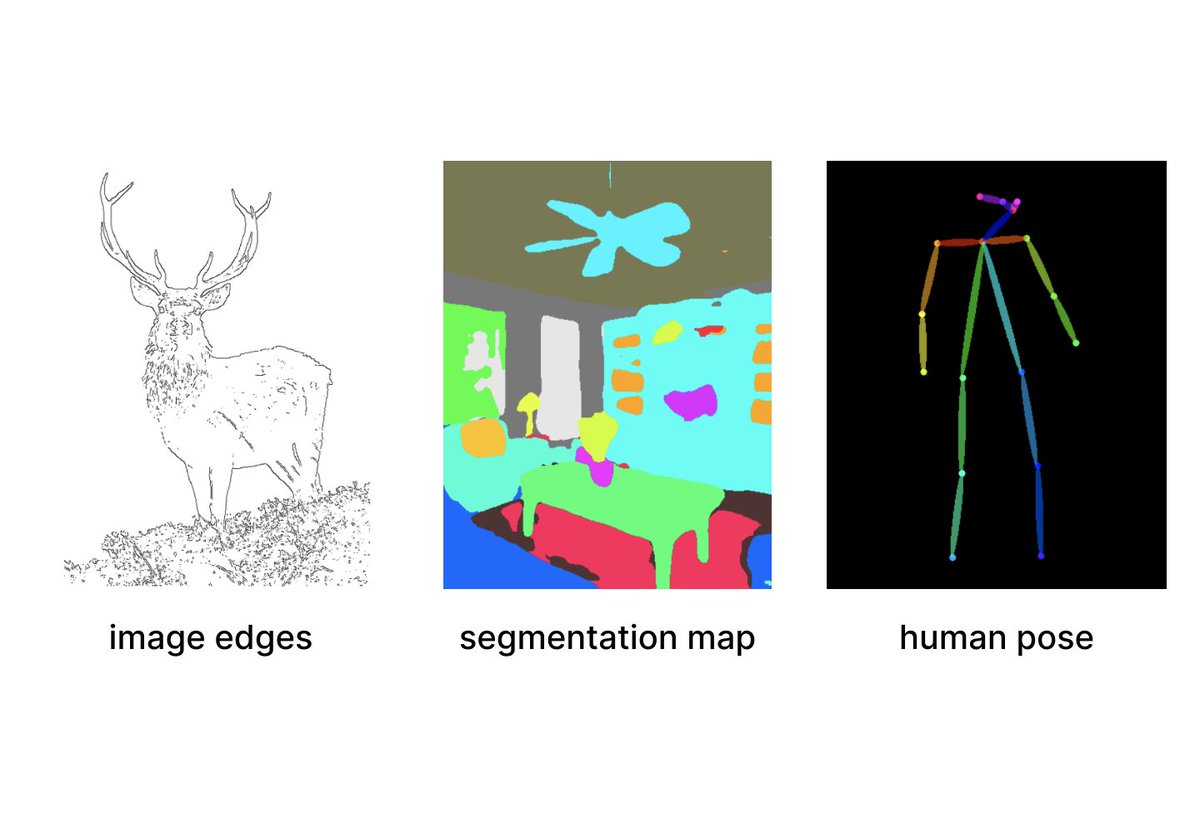

it is worth mentioning that the authors used a convolutional architecture to encode the condition image before feeding it into the cloned U-Net.

the way this work lets us control the structure of the images we generate is truly interesting.

here are some of our favorite results so far:

big s/o to @lvminzhang for this mind-blowing work and for making it open to everyone.

we're adding this method to the KREA Canvas, can't wait to see what y'all create with it! ⚡️

note: zero convolutions are 1x1 convolutions, not 1D.
